Volumetric shape representations have become ubiquitous in multi-view reconstruction tasks. They often build on regular voxel grids as discrete representations of 3D shape functions, such as signed distance functions (SDF) or radiance fields, either as the full shape model or as sampled instantiations of continuous representations, such as neural networks. Despite their proven efficiency, voxel representations come with an inherent trade-off between precision and complexity. This limitation can significantly degrade performance when moving away from simple, uncluttered scenes.
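To make the precision versus complexity trade-off concrete, the short sketch below computes the cell size and storage cost of a regular grid over a unit cube. It assumes, purely for illustration, one 4-byte scalar (e.g. an SDF value) per cell; real methods often store more per cell, which only worsens the cost.

```python
def voxel_grid_cost(resolution: int, bytes_per_value: int = 4) -> tuple[float, int, int]:
    """Return (cell edge length, number of cells, memory in bytes) for a regular
    grid of resolution**3 cells over a unit cube, assuming one scalar per cell."""
    edge = 1.0 / resolution       # spatial precision: smallest resolvable feature size
    cells = resolution ** 3       # complexity grows cubically with resolution
    return edge, cells, cells * bytes_per_value

for res in (128, 256, 512):
    edge, cells, mem = voxel_grid_cost(res)
    print(f"{res:>4}^3: edge={edge:.5f}, cells={cells:,}, memory={mem / 2**20:.0f} MiB")
```

Halving the cell edge multiplies the cell count and memory by eight, even though most cells lie far from the surface and carry little useful information.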

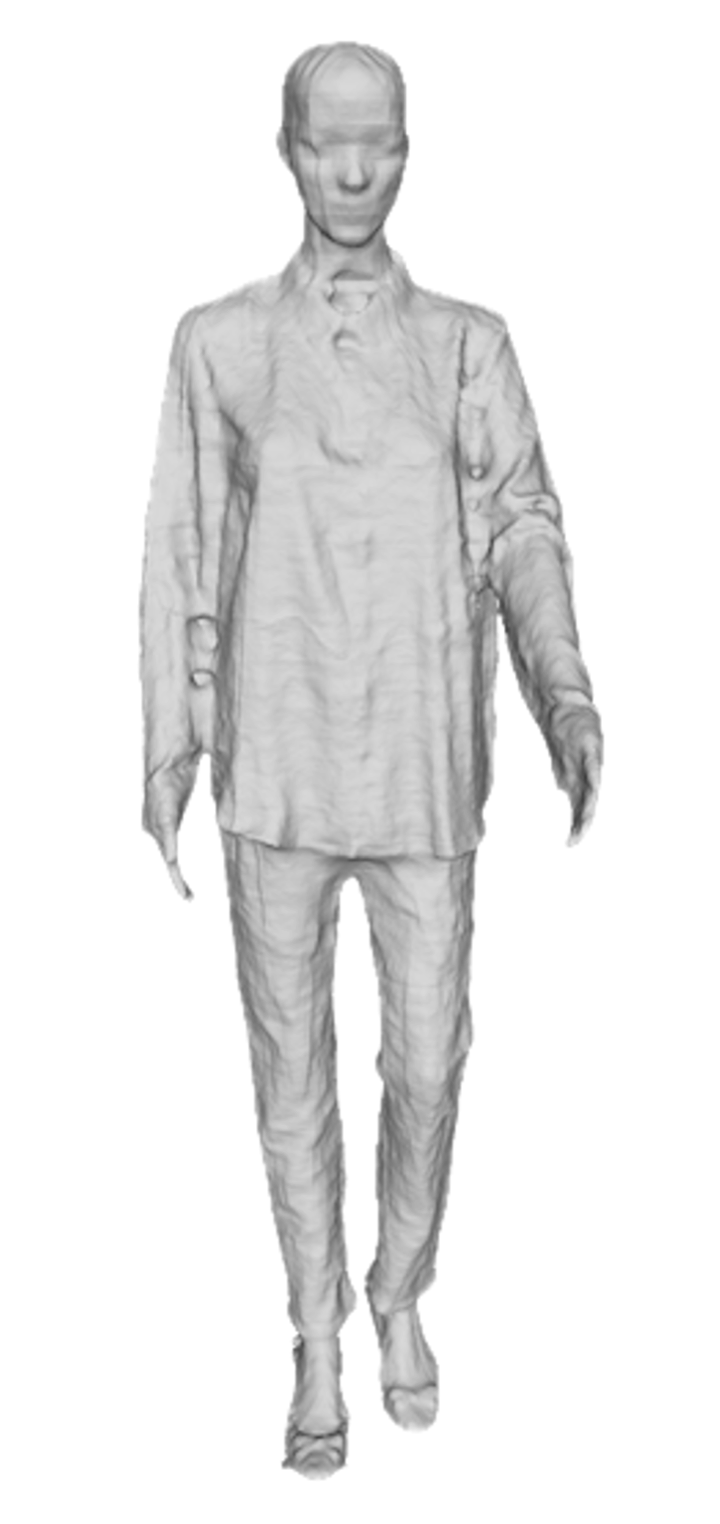

In this paper, we investigate an alternative discretization strategy based on the Centroidal Voronoi Tessellation (CVT). CVTs partition the observation space more effectively with respect to shape occupancy and concentrate the discretization around shape surfaces. To leverage this strategy for multi-view reconstruction, we introduce a volumetric optimization framework that combines explicit SDF fields with a shallow color network in order to estimate 3D shape properties over tetrahedral grids. Experimental results based on Chamfer statistics validate this approach, showing unprecedented reconstruction quality across various scenarios such as objects, open scenes, and humans.
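As a rough intuition for how a CVT can focus its cells around a surface, the sketch below runs a density-weighted Lloyd relaxation in 2D. It is a generic illustration, not the paper's 3D CVT construction: the circle SDF, the Gaussian `density` that peaks on the zero level set, and all parameter values are hypothetical choices made for this example. Each iteration assigns Monte Carlo samples to their nearest site (a discrete Voronoi cell) and moves every site to the centroid of its cell.

```python
import math
import random

def circle_sdf(x, y, cx=0.5, cy=0.5, r=0.3):
    # Signed distance to a circle: negative inside, positive outside.
    return math.hypot(x - cx, y - cy) - r

def density(x, y, sigma=0.05):
    # Hypothetical density: small background value plus a Gaussian peak on the zero level set.
    d = circle_sdf(x, y)
    return 0.05 + math.exp(-(d * d) / (2.0 * sigma * sigma))

def sample_density(n, rng, max_density=1.05):
    # Rejection sampling of n points in the unit square, distributed according to density().
    pts = []
    while len(pts) < n:
        x, y, u = rng.random(), rng.random(), rng.random()
        if u * max_density <= density(x, y):
            pts.append((x, y))
    return pts

def lloyd_cvt(num_sites=24, num_samples=1500, iterations=15, seed=0):
    """Approximate a density-weighted CVT of the unit square with Lloyd iterations."""
    rng = random.Random(seed)
    samples = sample_density(num_samples, rng)
    sites = [(rng.random(), rng.random()) for _ in range(num_sites)]
    for _ in range(iterations):
        acc = [[0.0, 0.0, 0] for _ in sites]
        for x, y in samples:
            # Nearest site = the Voronoi cell this sample falls into.
            k = min(range(len(sites)),
                    key=lambda i: (sites[i][0] - x) ** 2 + (sites[i][1] - y) ** 2)
            acc[k][0] += x
            acc[k][1] += y
            acc[k][2] += 1
        # Samples already follow the density, so their plain mean approximates
        # the density-weighted centroid; empty cells keep their current site.
        sites = [(sx / c, sy / c) if c else site
                 for (sx, sy, c), site in zip(acc, sites)]
    return sites

sites = lloyd_cvt()
near = sum(abs(circle_sdf(x, y)) < 0.1 for x, y in sites)
print(f"{near}/{len(sites)} sites lie within 0.1 of the surface")
```

With a uniform density this reduces to an ordinary CVT. Making the density peak near the zero level set pulls sites, and therefore cells, toward the surface, which is the kind of adaptivity a regular grid cannot offer.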

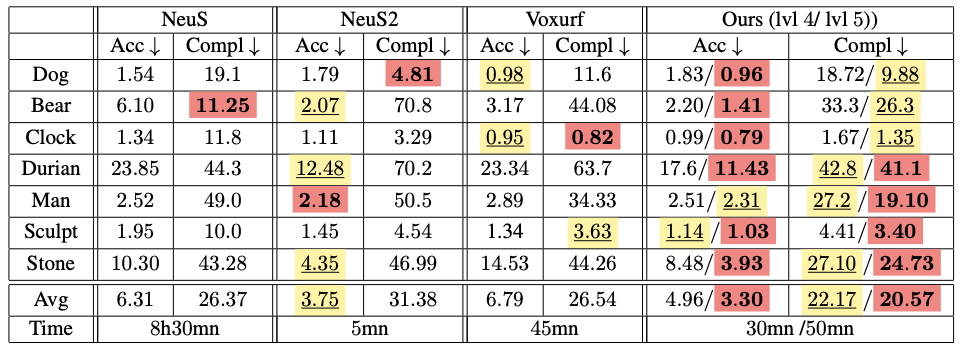

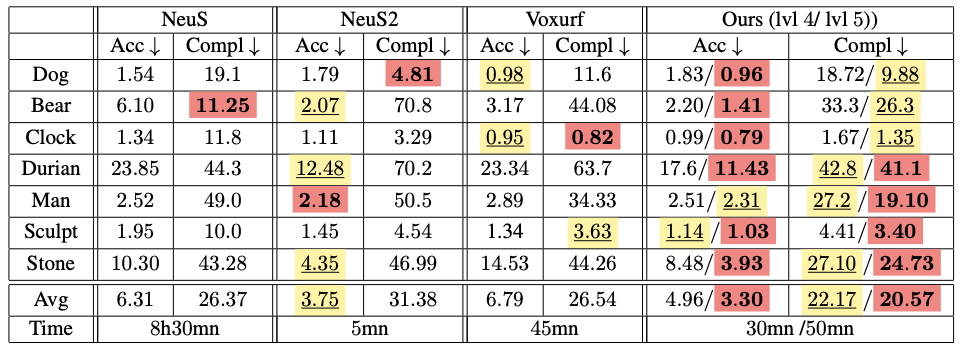

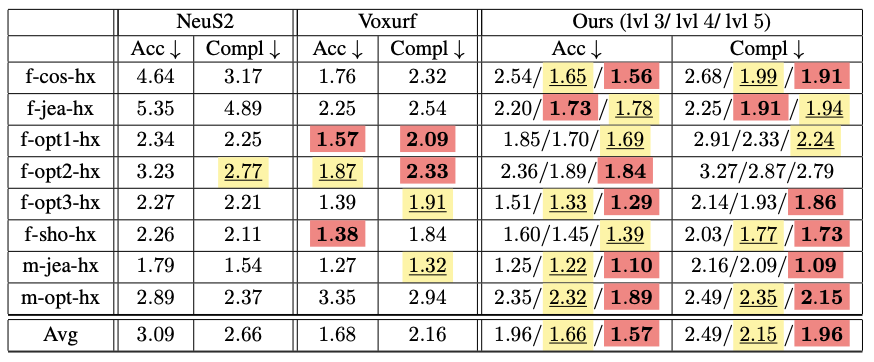

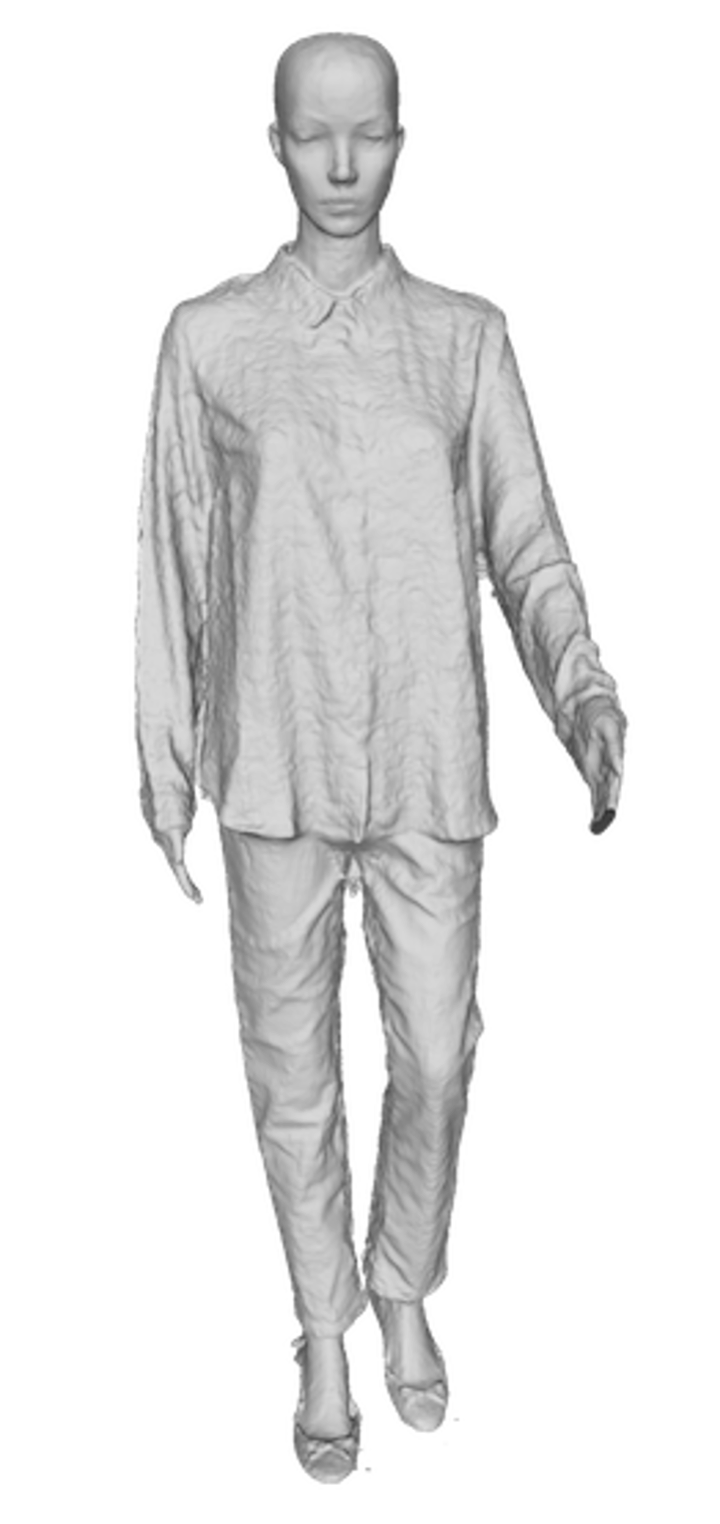

We evaluate the ability of our approach to reconstruct detailed 3D surfaces against the state-of-the-art methods NeuS [Wang 2021], NeuS2 [Wang 2023], and Voxurf [Wu 2022]. We evaluate our method qualitatively and quantitatively on a subset of the BlendedMVS dataset and a subset of the 4D Human Outfit dataset.
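For readers unfamiliar with Chamfer statistics, the sketch below computes a symmetric Chamfer distance between two point sets using one common convention: the mean nearest-neighbour distance from prediction to ground truth (accuracy), the same in the other direction (completeness), and their average. Other conventions use squared distances or sums, and the exact evaluation protocol used here is not specified, so treat this as an assumption. Practical evaluations also sample points on meshes and use a spatial index instead of this brute-force search.

```python
import math

def nearest_distances(src, dst):
    # For every point in src, the Euclidean distance to its closest point in dst
    # (brute force, O(len(src) * len(dst))).
    return [min(math.dist(p, q) for q in dst) for p in src]

def chamfer_distance(pred, gt):
    """Return (accuracy, completeness, chamfer) using mean nearest-neighbour distances."""
    accuracy = sum(nearest_distances(pred, gt)) / len(pred)
    completeness = sum(nearest_distances(gt, pred)) / len(gt)
    return accuracy, completeness, 0.5 * (accuracy + completeness)

pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(chamfer_distance(pred, gt))  # accuracy 0.0, completeness 1/3, Chamfer 1/6
```

Reporting both directions matters: a prediction covering only part of the ground truth can have perfect accuracy while its completeness term exposes the missing geometry.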

@article{thomas2024vortsdf3dmodelingcentroidal,
  author = {Diego Thomas and Briac Toussaint and Jean-Sebastien Franco and Edmond Boyer},
  title = {VortSDF: 3D Modeling with Centroidal Voronoi Tesselation on Signed Distance Field},
  journal = {arXiv},
  year = {2024},
}